Rethinking GenAI and Business Intelligence

Generative AI could revolutionize the business intelligence (BI) landscape, but traditional BI vendors are looking to incorporate the technology into existing workflows and paradigms. We recently spelled out how GenAI might influence the development of BI platforms. So far, traditional BI companies have leaned toward familiar methods like natural language interfaces and chatbots, missing the innovative potential GenAI brings for analytics development and accessibility to non-technical users.

What’s all the hubbub with GenAI?

GenAI is a type of artificial intelligence that is capable of generating text, images, or other media by leveraging a generative model. A generative model, in this case, refers to a large language model (LLM) such as OpenAI’s GPT, Google’s PaLM, or Meta’s LLaMA.

LLMs are trained on vast amounts of text data, and the resulting models are enormous (OpenAI’s latest model, GPT-4, is reported to have 1.76 trillion parameters). LLMs analyze a text prompt to understand the context of what you’re asking, and use their training to generate a response by predicting the most likely words and sentences that would come next. In the case of ChatGPT, the most popular GenAI-powered chatbot, a user can ask a question and receive an answer in natural language. A user can even iterate on their question and ChatGPT will keep the context of the entire conversation.

GPT is by far the most popular of the LLMs available on the market today. It’s been one year since the launch of ChatGPT, and according to OpenAI, over 100 million people use the service in a single week. OpenAI also claims that over 2 million developers are working with GPT. The hype around the service is incredibly high, and the practical implications of developers leveraging LLMs like GPT are just beginning to surface.

Investors are paying attention to generative AI start-ups like never before. As of August 2023, according to CB Insights, there have been 86 deals with $14.1 billion in funding in 2023 compared to just $2.5 billion in funding in 2022. Anthropic, an LLM developer with former employees of OpenAI, has received $850 million, while another LLM developer, Cohere, has received $270 million.

The hype isn’t just talk. Investors are making big bets in generative AI-focused start-ups.

In the BI world, there are a number of companies taking, for lack of a better term, the chatbot approach. Microsoft’s Copilot, which is integrated into Power BI, has probably been the most headline-grabbing of all the tools. Copilot in Power BI, which is still in beta, is promising a lot. Copilot provides a conversational interface to not just create narrative summaries and generate code from text, but also wholesale-build reports based on questions. Tableau’s Einstein Copilot conversational AI product similarly has a chatbot interface allowing you to ask questions and receive a narrative summary.

Other companies have been incorporating generative AI in different ways. ThoughtSpot took a natural language search interface, slapped “generated by AI” on the interface, and seemingly called it a day while saying it’s now powered by GPT under the hood. The results, in that case, have been underwhelming. Pyramid Analytics has similarly launched a generative AI-powered natural language to code interface, and users can also incorporate data from ChatGPT with a prompt-based data generator leveraging GPT’s large library of publicly available data sets.

With these new product capabilities, it’s interesting to note who these vendors are targeting with this functionality. If you go to Tableau AI’s product page, you’ll see a big “Empower business users” headline. In the case of ThoughtSpot, there’s a big headline reading “Ask questions like a business leader, get insights like an analyst.” It’s clear the general assumption is that generative AI can help to extend the use of analytics from data teams to business users and executives. The idea is to make it easier for non-technical personas to start incorporating data-driven decision-making into their workflows. It’s a dream that has been a long time coming, and GPT brings it closer within reach than ever.

The problem with the current approach

On its face, this new generative AI functionality seems to address a number of issues with BI tools. The reality is that these generative AI approaches are going to pose major problems for many organizations trying to extend data analytics to non-technical business users. There are many underlying problems with LLMs that, if not addressed, can cause massive headaches for organizations and, in particular, large enterprises looking to incorporate the technology into their stack.

Hallucinations

LLMs are known to hallucinate. A hallucination means that the LLM has generated text that is incorrect or nonsensical given the prompt entered. LLMs hallucinate because they are trying to extrapolate an answer based on the prompt you provided. They are not directly trying to answer your question—rather, they are trying to provide a response that best matches the prompt you provided.

GPT models are designed to recognize and generate patterns in the data they’ve been trained on. A model’s aim is to predict the next word in a sentence based on the context of the words that came before it. When faced with incomplete or ambiguous prompts, GPT models may fill in the blanks by generating hallucinations. Some researchers have suggested that these hallucinations happen up to 20% of the time with GPT-4. In addition, with the ability to keep the context of the conversation while using prompts, LLM hallucinations can snowball when users ask additional questions for a given prompt.
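To make the next-word idea concrete, here is a deliberately tiny sketch. It is a simple word-pair counter, not how GPT works internally (GPT uses a neural network trained on vastly more text), and the corpus and function names are made up for illustration. What it shows is that a model built this way returns the most likely continuation whether or not that continuation is true for the question being asked.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus for illustration; a real LLM trains on vastly more text.
CORPUS = (
    "sales rose in march . "
    "sales rose in april . "
    "sales fell in may . "
    "costs rose in may ."
).split()

def train_bigrams(tokens):
    """Count how often each word follows each other word."""
    following = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        following[current][nxt] += 1
    return following

def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = following.get(word)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

model = train_bigrams(CORPUS)
print(predict_next(model, "sales"))  # rose
print(predict_next(model, "in"))     # may
```

Asked to continue "sales", this toy model says "rose" because that pattern is most common in its training text, even though sales fell in May. That is the basic mechanism behind a fluent but wrong answer.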

This is a major problem for analytics companies incorporating this technology into their products. It’s very easy to imagine leveraging a chatbot within your favorite business intelligence platform, receiving an answer based on a hallucination, and using the answer to make an “informed” business decision. This is especially a problem for the non-technical personas these new chatbot interfaces target, who may not recognize that there is a problem with the data. If a Power BI business user generates a report based on a prompt, how is the user going to verify the accuracy of the response? If a business user can, at will, create new reports based on prompts, how are data teams going to keep track of the accurate reports and discard the inaccurate reports?

The goal of incorporating LLMs into analytics tools is, ostensibly, to extend access to data-driven decision-making to executives and business users, but in the end, it may wind up having the opposite effect if this situation is not addressed. It’s easy to imagine a scenario where an executive makes an adverse business decision based on information provided by an LLM interface and decides to take a step back from making an analytics investment. The goal of any data-based decision-making tool should be to provide trust, accuracy, and transparency when providing answers.

Transparency

Directly from ChatGPT:

“While it is a powerful tool for generating human-like text, the term ‘transparent’ may not be the most accurate description for GPT models.”

Closed LLMs are considered black boxes. LLMs are built on extremely complex neural network architectures with massive numbers of parameters (GPT-4 is reported to have 1.76 trillion). Each parameter contributes to the model’s understanding of the data, which makes it very challenging to pinpoint the specific role each parameter plays in its decision-making process. Put more simply, developers can send a prompt through an API and get a response, but they cannot interpret how the model arrived at that response.

This would seemingly be a nightmare for any developer trying to incorporate LLMs into their workflows to augment decision-making. Without the ability to understand how an LLM generated a wrong response, it’s impossible to understand where the fault in the algorithm lies. As business intelligence companies begin adding LLM functionality into their products, they cannot effectively explain to their customers how the LLM answered the question. This should give every company looking to incorporate the technology pause.

Potential long-term costs

With many companies incorporating OpenAI’s GPT into their platforms, costs associated with using the tool might begin to add up over the long term. Leveraging a business intelligence tool’s LLM integration has a cost associated with pulling information from the LLM (unless the company is running its own LLM, but even then, there are compute costs associated).

This means that BI tool pricing may become inextricably linked to the cost of using the LLM. While add-on-type functionality (e.g., a chatbot) may not have a huge impact on the bottom line, some BI tools like ThoughtSpot have embedded LLMs into the main focus of their product (e.g., natural language search), which has a huge potential cost implication in the future.
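A rough back-of-the-envelope calculation shows why the placement of the LLM matters. Every number below (user count, queries per day, tokens per query, price per 1,000 tokens, working days) is a hypothetical assumption chosen for illustration, not any vendor’s actual pricing or usage.

```python
def monthly_llm_cost(users, queries_per_user_per_day, tokens_per_query,
                     price_per_1k_tokens, working_days=22):
    """Estimate monthly spend on LLM calls, in the currency of the price."""
    total_tokens = (users * queries_per_user_per_day
                    * tokens_per_query * working_days)
    return total_tokens / 1000 * price_per_1k_tokens

# Hypothetical figures for illustration only.
# An occasional-use chatbot add-on: 2 LLM calls per user per day.
chatbot_addon = monthly_llm_cost(users=500, queries_per_user_per_day=2,
                                 tokens_per_query=1500, price_per_1k_tokens=0.03)

# LLM behind the core search box: 40 LLM calls per user per day.
core_search = monthly_llm_cost(users=500, queries_per_user_per_day=40,
                               tokens_per_query=1500, price_per_1k_tokens=0.03)

print(f"add-on:      {chatbot_addon:,.2f}")
print(f"core search: {core_search:,.2f}")
```

Under these assumptions, the only thing that changes between the two cases is how often the LLM is called, and the bill grows in direct proportion: twenty times the calls means twenty times the cost.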

The risk of “enshittification,” otherwise known as platform decay, of an LLM like GPT is sky high. If you’re not familiar with the term, it was coined by writer Cory Doctorow, who described it in an essay republished by Wired: “Here is how platforms die: first, they are good to their users; then they abuse their users to make things better for their business customers; finally, they abuse those business customers to claw back all the value for themselves.”

The biggest example is X (formerly Twitter), but you can also see the phenomenon in action with Google Search, Reddit, Unity, and many more. The risk is especially high when a company like OpenAI is backed by a public company with an aggressive timeline to see a return on its investment.

Dated information

The power of GPT is that you are able to ask a question and receive an answer almost instantaneously—however, the limitation with GPT is that the information can be outdated. Up until recently, GPT-4 was trained on information available prior to the end of 2021. The most recent update to GPT-4 has provided information up until April 2023. This is still seven months out of date as of November 2023, and it’s only becoming more outdated.

A company like Pyramid Analytics, which is incorporating GPT as a means to add contextual data from public sources, cannot provide up-to-date data to their end users. Imagine a scenario where an end user is looking to understand data on an infectious disease that rose to prominence in June 2023 based on publicly available CDC data, but they cannot get a response from their prompts. The user is just wasting their time using the tool rather than going directly to the data’s source.

In addition, there’s a risk of an LLM-based chatbot missing context in its narrative analysis. If you want to understand why there’s a dip in sales in March 2020 and the LLM hasn’t been trained on data from that time period, then you’re going to get some wacky responses.

The Sam Altman problem

In the middle of November 2023, Sam Altman, the CEO of OpenAI, was dismissed by the board of directors. After a tumultuous few days, he was reinstated by the board as the CEO of the company. The reasons behind his dismissal were vague, but the intervening time provided some sobering moments for many companies. Altman was given a position on Microsoft’s internal AI team, and Kevin Scott, the CTO of Microsoft, seemingly offered a job to every employee of OpenAI.

Make no mistake—this week of turmoil at OpenAI exposed a lot of risk with leveraging the model as the backbone of a product. At one point, the vast majority of OpenAI’s staff threatened to quit if the board of directors was not dissolved. The 2 million developers incorporating GPT into their products need to come up with backup strategies for solving problems without GPT.
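One simple form a backup strategy can take is an ordered list of answer sources, where the product falls through to the next one when the first is unavailable. The sketch below is a minimal illustration of that pattern; `hosted_llm` and `keyword_search` are hypothetical stand-ins, not real integrations.

```python
from typing import Callable, Optional, Sequence

def answer_with_fallback(prompt: str,
                         providers: Sequence[Callable[[str], str]]) -> Optional[str]:
    """Try each provider in order and return the first successful answer."""
    for provider in providers:
        try:
            return provider(prompt)
        except Exception:
            # Provider is unavailable or erroring; move on to the next one.
            continue
    return None

# Hypothetical stand-ins for real integrations.
def hosted_llm(prompt: str) -> str:
    raise ConnectionError("hosted model unavailable")

def keyword_search(prompt: str) -> str:
    return f"keyword results for: {prompt}"

print(answer_with_fallback("sales by region", [hosted_llm, keyword_search]))
```

Here the hosted model fails, so the user still gets keyword results rather than an error. A real product would also want logging and a way to tell the user which source answered.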

The answer is always Mario

Shigeru Miyamoto, creator of Super Mario Bros., Donkey Kong, The Legend of Zelda, and much more, is widely credited with saying, “A delayed game is eventually good, but a rushed game is forever bad.” This quote is something we need to take to heart in the business intelligence community.

LLMs are absolutely a game-changing technology that, when implemented properly, can expand the potential audience for analytics tools. However, when implemented in a haphazard, trend-chasing manner, they can be absolutely disastrous for businesses. Business intelligence and analytics companies need to understand the practical limitations of LLMs and design safeguards to ensure that users trust them to augment decision-making.

In addition, the initial approaches to incorporating this technology will be rooted in how we’ve previously thought about using analytics to inform decision-making. A complete rethink of how we might implement this truly game-changing technological solution is a necessity.

From the early 1970s until the mid-1990s, video games were, with some exceptions, 2D. Video games were set on a flat plane, and players moved the characters from left to right or up and down to accomplish an objective (reaching the flag pole or shooting alien invaders). When 3D technology became widely available, you started to see implementations of the technology that were deeply rooted in the way games were designed in the past. Games like Pandemonium! and Crash Bandicoot were 3D-rendered games that had gameplay reminiscent of previous eras’ games (left-to-right, up-and-down movement). They weren’t really 3D games in the way we conceive 3D games today.

Then came Super Mario 64. Super Mario 64 was one of the first fully free-movement 3D games. The big innovation came with the way the camera moved, which was controlled by the player. In the game, the camera was conceptualized as a cameraman (Lakitu) floating behind Mario. The player used the controller to move the camera around Mario to change the angle in a 3D environment. Super Mario 64 was also released with the Nintendo 64 as a launch game. The Nintendo 64 came with a controller that had the first widely available analog stick, allowing for a freedom of movement that wasn’t available on other consoles at the time.

3D gaming was mind-blowing at the time of its release, much like ChatGPT was a year ago. But the implementation of LLMs in analytics cannot be rooted in the ways of the past. The implementation of LLMs into BI tools must take into account the limitations of the technology. You must carefully consider how to incorporate the technology in meaningful ways that truly help to expand the reach of data-informed decision-making. Finally, workflows must be changed in order to accommodate how analytics are created to reflect the true power of LLMs.

For LLMs to truly succeed in opening analytics to non-technical and less data-literate audiences, analytics providers need to rethink how they are approaching current problems. Analytics must account for hallucinations by enabling oversight of reports and analysis generated by LLMs. A chain of accountability, as well as strict governance, must be put in place. This could take the form of a data analyst “seal of quality” (much like Nintendo’s seal of quality) on data products and dashboards.
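As a rough sketch of what a “seal of quality” could look like in practice, the example below tracks whether each report was generated by an LLM and whether an analyst has signed off on it. The `Report` class, its fields, and the rule that human-authored reports pass automatically are all assumptions made for illustration, not a description of any existing product.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Report:
    title: str
    generated_by_llm: bool
    reviewed_by: Optional[str] = None  # analyst who signed off, if any

    @property
    def has_seal(self) -> bool:
        """Assumed rule: LLM-generated reports need a named reviewer."""
        return (not self.generated_by_llm) or self.reviewed_by is not None

def publishable(reports: List[Report]) -> List[Report]:
    """Keep only the reports that carry the seal."""
    return [r for r in reports if r.has_seal]

reports = [
    Report("Q3 pipeline", generated_by_llm=True),
    Report("Q3 revenue", generated_by_llm=True, reviewed_by="analyst_a"),
    Report("Headcount", generated_by_llm=False),
]
print([r.title for r in publishable(reports)])  # ['Q3 revenue', 'Headcount']
```

Recording the reviewer’s name, rather than a simple yes/no flag, is what creates the chain of accountability: every LLM-generated report that reaches a business user can be traced back to the person who approved it.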

In addition, analytics companies need to develop more streamlined workflows that take into account the power of LLMs. Early attempts have been to make chatbot interfaces, but if a non-technical user does not understand the data, it will be very difficult to ask meaningful questions of the data. Adding context to data via LLMs could be very powerful for many audiences at large organizations.

The Tellius difference

You may be curious why we have not touched on Tellius, given we recently launched a beta for our own Copilot tool. The Tellius implementation of GPT has been a bit different from that of our peers. Tellius Copilot includes the ability to translate natural language to SQL and Python like other peer platforms, and Tellius Copilot can also generate synonyms for metadata automatically. But Tellius is taking a more considered approach to leveraging LLMs in the platform. Each of the above functions includes “human in the loop” feedback, meaning any action taken by an LLM needs to be confirmed by a human prior to its execution.
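To illustrate the general “human in the loop” idea, here is a minimal sketch in which generated SQL runs only after a confirmation step approves it. This is not Tellius Copilot’s actual implementation; the function name, the `confirm` callback, and the sample table are hypothetical, and the SQL string stands in for whatever an LLM might produce.

```python
import sqlite3

def run_with_confirmation(conn, generated_sql, confirm):
    """Execute LLM-generated SQL only if the `confirm` callback approves it."""
    if not confirm(generated_sql):
        return None  # nothing is executed without approval
    return conn.execute(generated_sql).fetchall()

# Small in-memory table for the example.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)",
                 [("east", 100.0), ("west", 250.0)])

# Stand-in for SQL produced by an LLM from a natural language question.
generated_sql = ("SELECT region, SUM(amount) FROM sales "
                 "GROUP BY region ORDER BY region")

# In a real tool, `confirm` would show the SQL to a person and wait for a click.
approved = run_with_confirmation(conn, generated_sql, confirm=lambda sql: True)
rejected = run_with_confirmation(conn, generated_sql, confirm=lambda sql: False)
print(approved)  # [('east', 100.0), ('west', 250.0)]
print(rejected)  # None
```

The design choice worth noting is that the check sits between generation and execution, so a hallucinated or wrong query can be caught before it ever produces a number someone might act on.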

Tellius also provides AI-powered Automated Insights and AutoML, which do not use LLM technology. This core functionality of Tellius exposes business-friendly interpretations of ML models to help expand data science activities to more members of an organization. Automated Insights and AutoML come with explainable AI, allowing users with no experience in predictive analytics to better understand how ML models are working.

The mission of Tellius is to augment analytics users’ experience with AI-powered tools, enabling data analysts, data engineers, and business users to do more with data. LLMs, like GPT, are one arrow in our quiver. Tellius has had natural language search since the very beginning of the company (in 2017), with improvements being made since then. We firmly believe our natural language experience is superior to anything available on the market today. This past year, we’ve been researching how LLMs could be used to augment our natural language search, and we’ll be providing updates on our development in the next few months.

With that in mind, Tellius will continue to expand on its mission and use LLMs in a responsible manner while incorporating feedback from our users to help achieve our goal of expanding data-driven decision-making throughout organizations.

If you’d like to learn more about Tellius and Tellius Copilot, please contact us for a demo today.

Get release updates delivered straight to your inbox.

No spam—we hate it as much as you do!

Related blog posts

Tool and strategies modern teams need to help their companies grow.

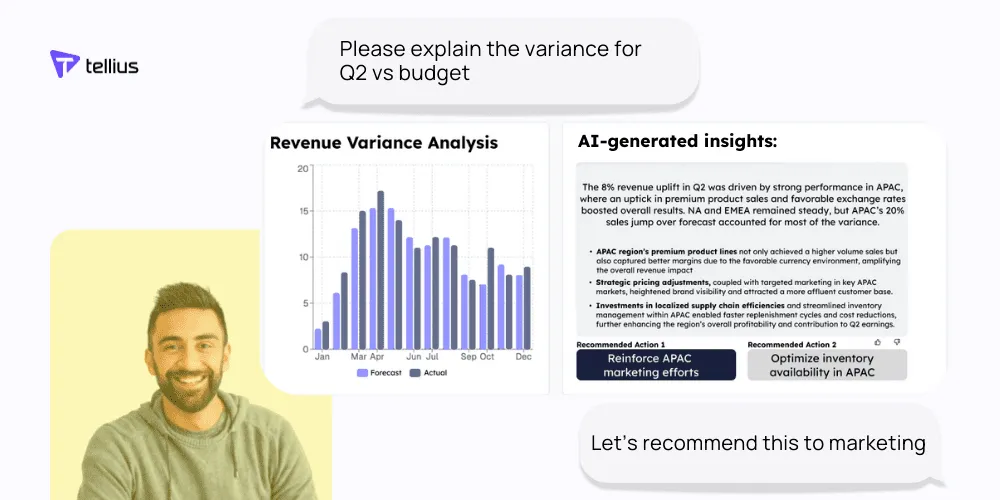

How AI Variance Analysis Transforms FP&A

Learn how AI-powered variance analysis is transforming how organizations uncover and act upon financial insights.

RevOps Intelligence Redefined: AI-Powered Agents Meet Unified Knowledge Layer

Learn how AI-powered variance analysis is transforming how organizations uncover and act upon financial insights.

10 AI Analytics Myths, Demystified

Here's how analytics and business leaders can gain a better picture of AI’s strengths and weaknesses when it comes to analytics uses.